SLAM Robot Navigation

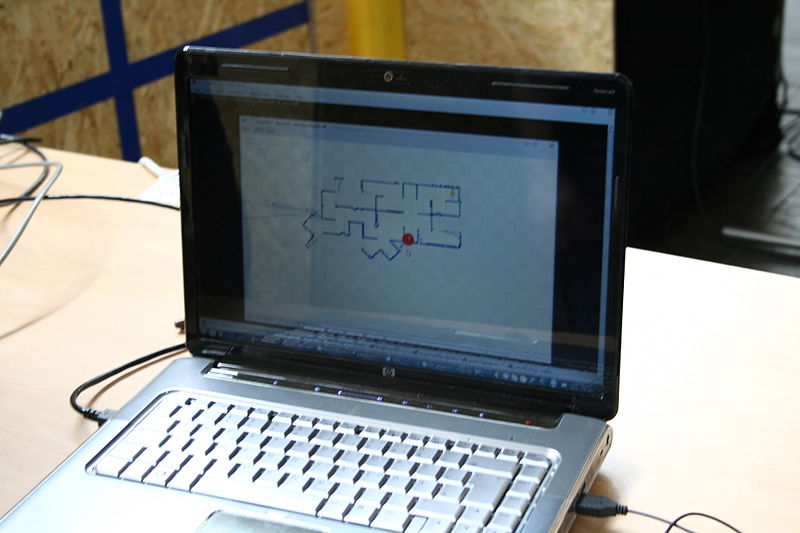

SLAM robot navigation: now here’s something all of us could use. SLAM (simultaneous localization and mapping) is a technique used by robots and autonomous vehicles to build a map of an unknown environment, or to update a map of a known environment, while keeping track of their current location within it. Think of an instant, instantly updatable Google Maps built into your system.

One of the most widely researched subfields of robotics, SLAM robot navigation seems simple, but deceptively so. Imagine a simple mobile robot: a set of wheels connected to a motor and a camera, complete with actuators, the physical devices that control the speed and direction of the unit. Now imagine this robot being remotely driven by a human operator to map inaccessible places. The actuators allow the robot to move around, while the camera provides enough visual information for the operator to understand where surrounding objects are and how the robot is oriented relative to them.

What the human operator is doing is an example of SLAM robot navigation. Mapping is determining the location of objects in the environment, and localization is establishing the robot’s position with respect to these objects. The challenge for researchers in the SLAM subfield of robotics is finding a way for robots to do this autonomously, without any human assistance whatsoever.

Because SLAM robot navigation means building a new map, or repeatedly improving an existing one, while at the same time localizing the robot within that map, it creates an inherent problem: the two characteristic questions, what the environment looks like and where the robot is within it, cannot be answered independently of each other.

It is simply too complex a task to estimate the robot's current location without an existing map or a directional reference. Many people therefore refer to SLAM as a chicken-or-egg problem: an unbiased map is needed for localization, while an accurate pose estimate is needed to build that map. If a solution to the SLAM problem could be found, though, nearly limitless mapping possibilities would be within reach.
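The circular dependency shows up even in a few lines of geometry. The sketch below is only an illustration under simplifying assumptions: a flat 2-D world, a sensor that reports distance and bearing to a single object, and a heading that is somehow already known. The function names `map_landmark` and `localize` are made up for this example.

```python
import math

def map_landmark(pose, distance, bearing):
    """Mapping: place an object on the map. Needs the robot's pose as input."""
    x, y, heading = pose
    angle = heading + bearing
    return (x + distance * math.cos(angle), y + distance * math.sin(angle))

def localize(landmark, distance, bearing, heading):
    """Localization: recover the robot's position. Needs the map as input."""
    lx, ly = landmark
    angle = heading + bearing
    return (lx - distance * math.cos(angle), ly - distance * math.sin(angle))

# A robot at the origin, facing +x, sees an object 2 units away,
# 90 degrees to its left.
landmark = map_landmark((0.0, 0.0, 0.0), 2.0, math.pi / 2)

# With that landmark on the map, the same reading leads back to the robot.
position = localize(landmark, 2.0, math.pi / 2, heading=0.0)
print(landmark, position)
```

Each function takes as input exactly what the other one produces, which is why neither can be run first when both the pose and the map start out unknown.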

Maps could be made in areas that are too dangerous or inaccessible for humans, like deep-sea environments or unstable structures. It would make robot navigation possible in places like space stations and other planets, removing the need for localization methods like GPS or man-made beacons. GPS is currently only accurate to within about half a meter, an error that is often large enough to make the difference between successful mapping and getting lost. Man-made beacons, on the other hand, are expensive in terms of both time and money.

SLAM robot navigation is a big but relatively new subfield of robotics. It was originally developed by Hugh Durrant-Whyte and John J. Leonard, based on earlier work by Smith, Self and Cheeseman. It wasn’t until the mid-1980s that Smith and Durrant-Whyte developed a concrete representation of uncertainty in feature location, which was a major step in establishing the significance of finding a practical rather than a theoretical solution to robot navigation.

The paper provided a foundation for finding ways to deal with the errors associated with navigation. Soon thereafter, another study showed that the errors in estimated feature locations are correlated with one another, because an error in the robot's motion affects every feature location it records.
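A small simulation makes that correlation easier to see. This is a hedged, one-dimensional sketch and not the method used in the study: it assumes a single pose error shared by two landmark estimates plus independent sensor noise for each. The function name and the noise values are made up for illustration.

```python
import math
import random

def landmark_error_correlation(trials=20000, pose_sigma=1.0,
                               sensor_sigma=0.5, seed=0):
    """Estimate the correlation between the errors of two mapped landmarks."""
    rng = random.Random(seed)
    errs_a, errs_b = [], []
    for _ in range(trials):
        # The robot's own position error leaks into every landmark it maps.
        pose_error = rng.gauss(0.0, pose_sigma)
        # Each landmark also gets its own independent sensor error.
        errs_a.append(pose_error + rng.gauss(0.0, sensor_sigma))
        errs_b.append(pose_error + rng.gauss(0.0, sensor_sigma))
    mean_a = sum(errs_a) / trials
    mean_b = sum(errs_b) / trials
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(errs_a, errs_b))
    var_a = sum((a - mean_a) ** 2 for a in errs_a)
    var_b = sum((b - mean_b) ** 2 for b in errs_b)
    return cov / math.sqrt(var_a * var_b)

r = landmark_error_correlation()
print(f"correlation between the two landmark errors: {r:.2f}")
```

Under these assumptions the expected correlation is pose_sigma² / (pose_sigma² + sensor_sigma²), so with the default values the printed figure should land near 0.8. Setting `pose_sigma` to zero removes the shared error, and the correlation falls to roughly zero.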

There are other problems connected to SLAM robot navigation, as specific and detailed mapping is always complex. For example, if a map is built from the measured distance and direction traveled by a robot, and those measurements carry inaccuracies introduced by imperfect sensors and ambient noise, then any features added to the map will contain corresponding errors. Over time and motion, these locating and mapping errors build cumulatively, inevitably distorting the map and undermining the robot's ability to determine its actual location and move with sufficient accuracy. There are various techniques to compensate for errors, such as having the robot recognize features it has come across previously and then adjusting recent parts of the map so that the two instances of that feature become one.
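How quickly small errors pile up can be sketched with a toy dead-reckoning simulation. The assumptions here are mine, not the article's: the robot really drives in a straight line, its step length is measured perfectly, and only its heading estimate drifts by a small random amount at each step. The name `dead_reckon` and the noise level are illustrative.

```python
import math
import random

def dead_reckon(steps, step_len=1.0, heading_noise=0.02, seed=0):
    """Integrate noisy heading readings while the robot drives along +x.

    Returns the distance between the true and the estimated position
    after each step.
    """
    rng = random.Random(seed)
    est_x = est_y = 0.0
    est_heading = 0.0
    errors = []
    for i in range(1, steps + 1):
        true_x, true_y = i * step_len, 0.0  # the robot really goes straight
        # Each step adds a small heading error that is never corrected,
        # so it keeps affecting every later position estimate.
        est_heading += rng.gauss(0.0, heading_noise)
        est_x += step_len * math.cos(est_heading)
        est_y += step_len * math.sin(est_heading)
        errors.append(math.hypot(est_x - true_x, est_y - true_y))
    return errors

errors = dead_reckon(steps=500)
for step in (10, 100, 500):
    print(f"position error after {step:3d} steps: {errors[step - 1]:.2f}")
```

Any feature mapped late in the run inherits the accumulated position error, which is why re-recognizing an earlier feature is so valuable: it gives the robot a chance to pull the drifted estimate back.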

New features and variants of the SLAM algorithm have come out and continue to come out, such as MIT’s Atlas. MIT researchers have developed a topological approach to SLAM that allows a robot to map large-scale environments by combining smaller maps. Applications for the technology range from Navy autonomous underwater vehicles (AUVs) to flying robots that can map caves in Afghanistan.

The CMU Robotics Institute describes a newer variation, commonly used in mobile robots, called FastSLAM. It uses a particle filter that allows the robot to assimilate more landmarks into its internal map representation faster than traditional SLAM approaches. The FastSLAM algorithms were tested on a standard pickup truck that had been converted into an autonomous robot capable of speeds up to 90 km/h.
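To give a feel for what a particle filter does, here is a deliberately reduced sketch. It is not FastSLAM: it assumes a one-dimensional world and a single landmark whose position is already known, so it only shows the predict, weight and resample cycle used for localization. FastSLAM goes further by letting every particle carry its own landmark estimates. All names and noise values below are made up for the example.

```python
import math
import random

def particle_filter_1d(controls, measurements, landmark, n=500,
                       motion_noise=0.2, sensor_noise=0.5, seed=0):
    """Estimate a robot's 1-D position from noisy moves and landmark readings.

    controls[i] is the commanded displacement at step i; measurements[i]
    is the measured signed distance to the landmark after that move.
    """
    rng = random.Random(seed)
    # Start with no idea where the robot is: spread the particles widely.
    particles = [rng.uniform(-10.0, 10.0) for _ in range(n)]
    for u, z in zip(controls, measurements):
        # Predict: move every particle, each with its own motion noise.
        particles = [p + u + rng.gauss(0.0, motion_noise) for p in particles]
        # Weight: particles whose expected reading matches z score higher.
        weights = [math.exp(-0.5 * ((z - (landmark - p)) / sensor_noise) ** 2)
                   for p in particles]
        if sum(weights) == 0.0:
            weights = [1.0] * n  # every particle looks implausible; keep all
        # Resample: draw a new population in proportion to the weights.
        particles = rng.choices(particles, weights=weights, k=n)
    return sum(particles) / n

def simulate(steps=20, landmark=15.0, seed=1):
    """Generate a true trajectory plus the noisy data the filter gets to see."""
    rng = random.Random(seed)
    true_x = 2.0
    controls, measurements = [], []
    for _ in range(steps):
        u = 0.5
        true_x += u + rng.gauss(0.0, 0.1)
        controls.append(u)
        measurements.append(landmark - true_x + rng.gauss(0.0, 0.5))
    return true_x, controls, measurements

true_x, controls, measurements = simulate()
estimate = particle_filter_1d(controls, measurements, landmark=15.0)
print(f"true position {true_x:.2f}, estimated position {estimate:.2f}")
```

Each particle is one guess at where the robot might be. Guesses that disagree with the sensor readings die out during resampling, so the population converges on the plausible positions without ever needing a single closed-form estimate.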

RoboRealm, an application for use in computer vision, image analysis, and robotic vision systems, also makes use of the SLAM algorithm with its AVM Navigator module. It’s a third-party module that provides object recognition functionality, allowing one to program robots to recognize objects in the environment. The module also lets the human operator navigate the robot in relation to those objects. It has a Navigate mode, which is similar to Object Recognition but provides variables that specify which direction the object is in relative to the robot.

Using these variables, one can steer the robot towards a particular object as the algorithm attempts to align the position of a turret (with a camera fixed on top) and the body of the robot with the center of the recognized object. If multiple objects are recognized, the object with the highest index (the most recently learned object) is chosen. There is also the Nova gate mode, which is similar to the Navigate mode. It provides a visual stepping-stone functionality by identifying 'gate' objects in succession. By sequentially identifying objects, one can lead a robot along a path of visual markers. Once a route is complete, the robot can re-travel that route by identifying the same gates/objects and using them to steer along the correct path.
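The steering idea can be sketched in a few lines. This is not RoboRealm's or AVM Navigator's actual API: the object dictionaries, the `center_x` and `index` fields, and both function names are hypothetical, and the sketch steers only a two-wheeled body, ignoring the turret. It assumes the vision system reports the horizontal pixel position of each recognized object.

```python
def pick_target(objects):
    """Choose the recognized object with the highest index, or None if empty."""
    return max(objects, key=lambda obj: obj["index"], default=None)

def steer_toward(center_x, image_width, base_speed=0.5, gain=0.5):
    """Return (left, right) wheel speeds in [0, 1] that turn toward the object."""
    half = image_width / 2
    # -1 means the object is at the far left of the image, +1 the far right.
    offset = (center_x - half) / half
    turn = gain * offset
    # Speeding up the left wheel and slowing the right one turns the robot right.
    left = min(1.0, max(0.0, base_speed + turn))
    right = min(1.0, max(0.0, base_speed - turn))
    return left, right

seen = [{"index": 0, "center_x": 100}, {"index": 3, "center_x": 480}]
target = pick_target(seen)
print(steer_toward(target["center_x"], image_width=640))  # (0.75, 0.25)
```

When the object sits at the center of the image the offset is zero and both wheels run at the same speed, so the robot drives straight at it. A gate-style route would repeat this for each marker in turn, switching to the next one once the current marker is reached.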

Other variations include "vSLAM" (visual Simultaneous Localization and Mapping), which does localization and mapping with a single camera and dead reckoning, and "RatSLAM", a robot navigation system based on models of a rat's brain.

Seriously, I know a lot of human drivers who would benefit from SLAM robot navigation more than these robots.